The data centre has created demand for an infrastructure that provides greater bandwidth and higher data rates, but how has this impacted the market for optical components? Keely Portway asks the question

The data centre sector is diverse, with the emergence of the data centre ‘as we know it’ – the hyperscale data centre or cloud service provider – driving the market. ‘I would say these are clearly driving the requirements for optical suppliers now, in terms of the form factors and optical interfaces that have to be focused on initially,’ said Finisar’s Christian Urricariet.

The company is known as one of the earliest to build high-speed data networks for data centres, and has witnessed this market’s evolution first-hand. ‘The emergence of these cloud operators has changed the way we do business,’ admitted Urricariet, its senior director of global marketing. ‘Each large cloud service provider has its own, often diverging, requirements for its optical links, which means that those of us in the industry have to distribute our R&D resources a little more intelligently among a larger number of products.

‘When we had 10 or 20 OEMs influencing our product development, their requirements usually converged; now the diverging requirements mean we have to make hard choices once in a while. It has clearly changed the way we develop and define our product portfolio.’

Need for speed

Providers of blown fibre solutions have had a similar experience, as Scott Modha, who works in international business development at Emtelle, explained: ‘The data centre sector has evolved over the years with the growth of FTTH, 5G and intelligent transport systems, and all the different market sectors we are involved in require data centres. With the increase in demand for speed and connectivity around the globe comes the demand for data centres.

‘Over the last five years the data centre market has grown vastly. We have been working with some of the major global cloud operators in Europe and, with FTTH being our core business and this market growing, we’ve seen the need for data centres increase as a result. As these superfast broadband connections become more and more utilised, the demand for additional cloud storage and data centres will grow with it.’

As well as fibre interconnectivity solutions, Emtelle supplies PVC duct systems to the data centre market, and the firm has been working with its customers to offer installation solutions. As an example, it provided bespoke microduct products to Splice UK, a manufacturer and installer of advanced fibre cabling systems, for its pulled fibre installation solution. ‘These comprise an infrastructure solution that provides fibre pathways in place of conventional fibre cabling,’ said Modha. ‘Each microduct incorporates a pull cord, allowing quick and easy installation of a micro fibre cable by simply attaching the cable and pulling it through. This allows for a “pay-as-you-grow” system that also reduces disruption in the data centre environment.’

Coherent developments

Urricariet believes that the data centre market has also been a factor in the development of coherent technology in optical connections. ‘Coherent is being used for interconnects between data centres, and also for all kinds of core and metro access applications, to support things like 5G mobile, for example. So, the core of the networks will increasingly use coherent technology, and we are investing significantly in components for just such applications.’

Winston Way, CTO for systems at NeoPhotonics, added: ‘Ever since the first 100Gb/s optical coherent system deployment in 2010, the data rate per wavelength of a line-side coherent transceiver has been steadily increasing. The first 200Gb/s system product appeared in 2013 and the first 400Gb/s system product in 2017. Increasingly, the need for higher speeds per wavelength, and therefore higher capacity per fibre, is being driven by the requirements of data centre interconnects (DCI). DCI puts a premium on raw data capacity and lower cost per bit, with less importance attached to the flexibility often required in telecom systems.

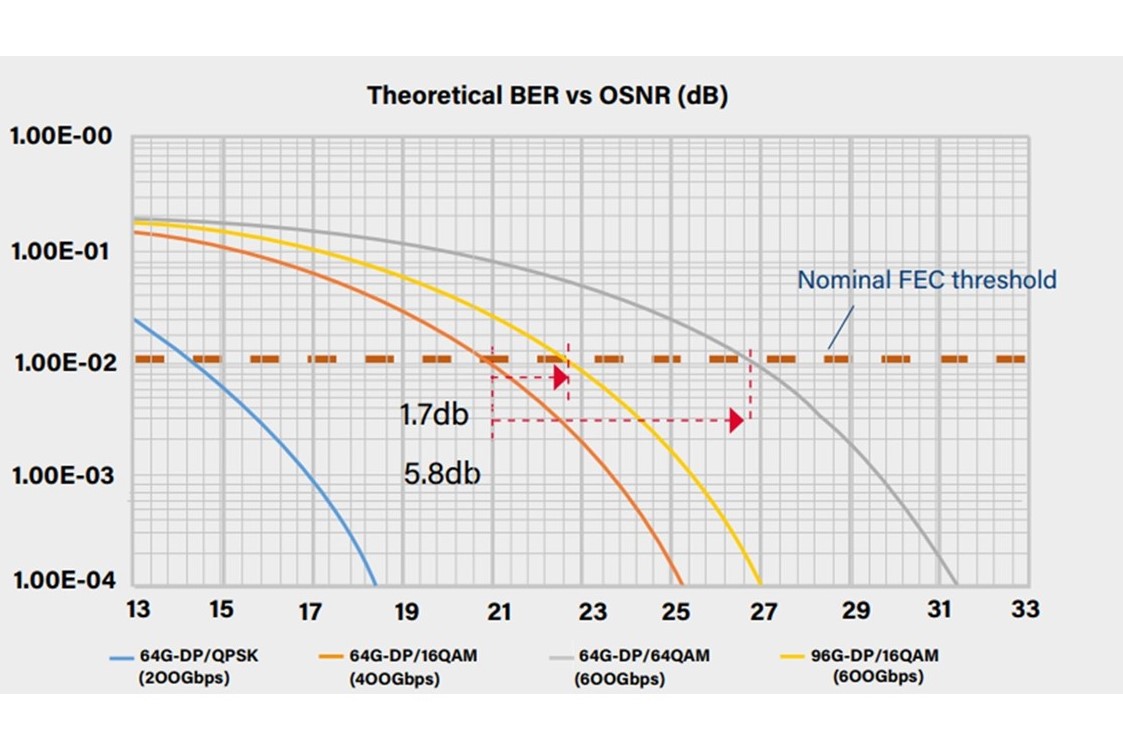

‘Generally speaking, two main technical approaches have been used to increase the total data rate per wavelength, i.e., either higher speed (baud rate) or higher-order modulation. To increase the net data rate from 400 to 600Gb/s with a 28 per cent forward-error-correction (FEC) overhead, one could increase the baud rate from 64 to 96Gbaud, or increase the modulation order from 16QAM to 64QAM (quadrature amplitude modulation).’
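The arithmetic behind these two options can be checked with a short sketch. It assumes dual-polarisation transmission (two polarisations, as in polarisation-multiplexed coherent systems) and treats the 28 per cent FEC overhead as a simple multiplier, so that line rate = net rate × 1.28; the function name `net_rate_gbps` is hypothetical, chosen for illustration.

```python
import math


def net_rate_gbps(baud_gbaud: float, qam_order: int,
                  polarisations: int = 2, fec_overhead: float = 0.28) -> float:
    """Net (post-FEC) data rate of one coherent carrier, in Gb/s.

    Assumption: dual-polarisation by default, and the FEC overhead is
    modelled as line_rate = net_rate * (1 + fec_overhead).
    """
    bits_per_symbol = math.log2(qam_order)  # 16QAM -> 4 bits, 64QAM -> 6 bits
    line_rate = baud_gbaud * bits_per_symbol * polarisations
    return line_rate / (1 + fec_overhead)


# Baseline and the two upgrade paths described above
baseline = net_rate_gbps(64, 16)  # 64Gbaud, 16QAM
faster = net_rate_gbps(96, 16)    # path 1: raise the baud rate
denser = net_rate_gbps(64, 64)    # path 2: raise the modulation order

for label, rate in [("baseline", baseline), ("path 1", faster), ("path 2", denser)]:
    print(f"{label}: {rate:.0f} Gb/s")
```

Both paths multiply the raw line rate by the same factor of 1.5 (96/64 for the baud rate, 6/4 bits per symbol for the modulation), which is why they land on the same 600Gb/s net rate.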

But, asked Way, ‘which path could offer better system performance and is less costly to implement? Historically, the first path of increasing speed was used to grow the electrical I/O speed of conventional client-side transceivers, or the coherent per-I/Q-lane baud rate. The trend has been roughly a tenfold speed increase per decade, or a doubling every three years. The technical challenge in this path for coherent transceivers is mainly the limited analogue bandwidth of the digital-to-analogue converter (DAC) and analogue-to-digital converter (ADC) in a coherent digital signal processor (DSP). This can be mitigated to a certain degree by the equalisers in a coherent DSP. Optics, on the other hand, should achieve more than 50GHz of modulation bandwidth (suitable for 100Gbaud data) in 2019.’
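The two rules of thumb in this paragraph can be sanity-checked numerically: a tenfold increase over ten years corresponds to a doubling time of about three years, and a Nyquist-shaped signal needs an analogue bandwidth of roughly half its symbol rate, which is why about 50GHz suits 100Gbaud. The half-baud-rate figure is a standard approximation assumed here rather than something Way states, and the function names are illustrative only.

```python
import math


def doubling_time_years(growth_factor: float, period_years: float) -> float:
    """Years needed to double, given `growth_factor` growth over `period_years`.

    Assumes steady exponential growth across the whole period.
    """
    return period_years * math.log(2) / math.log(growth_factor)


def nyquist_bandwidth_ghz(baud_gbaud: float) -> float:
    """Approximate minimum analogue bandwidth for a given symbol rate.

    Rule of thumb (assumption): an ideal Nyquist-shaped signal occupies half
    the symbol rate in baseband; real components usually need some margin.
    """
    return baud_gbaud / 2


print(f"10x per decade -> doubling every {doubling_time_years(10, 10):.1f} years")
print(f"100Gbaud needs roughly {nyquist_bandwidth_ghz(100):.0f}GHz of bandwidth")
```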

A tougher path

The second path of increasing modulation order is, said Way, a much tougher one. ‘The higher the modulation order, the higher the optical signal-to-noise ratio (OSNR) requirement,’ he explained. ‘This is easy to understand from a constellation diagram: the data points are packed more densely, so a higher OSNR is needed to discriminate between neighbouring points. This problem cannot be mitigated by equalisers or an already heavily burdened FEC, but can be alleviated by new modulation techniques, such as probabilistic constellation shaping.

‘Figure 1 illustrates that, due to the high modulation order of the second path (using 64QAM), its required OSNR is about 4dB higher than that of the first path (using 16QAM), although both achieve a 600Gb/s net data rate. In practice, this extra OSNR penalty is even worse because of the implementation difficulties of higher-order modulation, which include the effective number of bits of the DAC and ADC, the linewidth of the laser source, the extinction ratio of polarisation-multiplexed modulators, amplifier linearity, etc. The higher OSNR requirement also results in a shorter transmission distance, which is a problem for conventional optical transport networks, but the approach could still be useful for short-haul inter- or intra-data centre applications in the near future.’
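A back-of-envelope version of this comparison, not Way’s own calculation, can be made from constellation geometry alone. At equal average power, the minimum distance between neighbouring points shrinks as the modulation order grows, so (in a high-SNR approximation) the required signal-to-noise ratio rises by the ratio of the squared minimum distances. Because OSNR is quoted in a fixed reference bandwidth, a signal at a higher baud rate needs proportionally more OSNR for the same SNR. The sketch assumes both paths use the same number of polarisations and target error rate, and ignores the implementation penalties listed above; the helper names are hypothetical.

```python
import math
from itertools import combinations, product


def square_qam(order: int) -> list[complex]:
    """Points of a square M-QAM constellation on an odd-integer grid."""
    side = math.isqrt(order)
    if side * side != order:
        raise ValueError("only square QAM orders (16, 64, 256, ...) are supported")
    levels = [2 * i - (side - 1) for i in range(side)]  # e.g. -3, -1, 1, 3
    return [complex(i, q) for i, q in product(levels, levels)]


def normalised_dmin_sq(order: int) -> float:
    """Squared minimum point spacing at unit average symbol energy."""
    points = square_qam(order)
    avg_energy = sum(abs(p) ** 2 for p in points) / len(points)
    d_min = min(abs(a - b) for a, b in combinations(points, 2))
    return d_min ** 2 / avg_energy


def osnr_penalty_db(order_1: int, baud_1: float, order_2: int, baud_2: float) -> float:
    """Extra OSNR (dB) that configuration 2 needs relative to configuration 1.

    SNR term: ratio of normalised squared minimum distances.
    Bandwidth term: required OSNR scales with baud rate, because OSNR is
    measured in a fixed reference bandwidth.
    """
    snr_term = 10 * math.log10(normalised_dmin_sq(order_1) / normalised_dmin_sq(order_2))
    bandwidth_term = 10 * math.log10(baud_2 / baud_1)
    return snr_term + bandwidth_term


# Path 1: 16QAM at 96Gbaud; path 2: 64QAM at 64Gbaud (both 600Gb/s net)
penalty = osnr_penalty_db(16, 96, 64, 64)
print(f"64QAM path needs about {penalty:.1f}dB more OSNR")
```

The constellation term alone is about 6.2dB; the lower baud rate of the 64QAM path recovers about 1.8dB, because less noise falls within its narrower signal bandwidth. The resulting estimate of roughly 4.5dB is in line with the ‘about 4dB’ difference described, before any implementation penalties are added.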

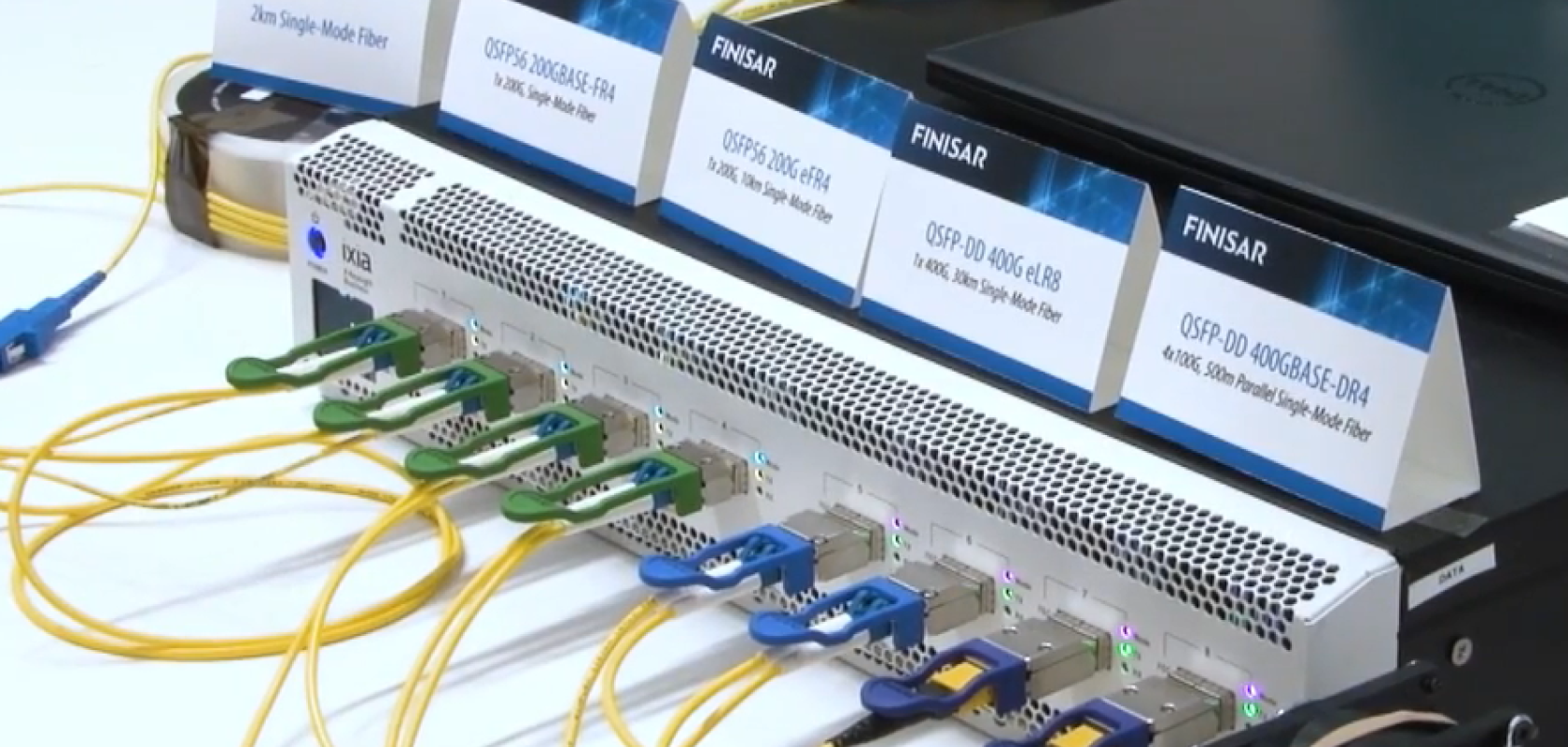

With 400G components gathering pace in this drive for higher data rates, the form factor has also been an area of focus, as Brad Booth, chair of the Consortium for On-Board Optics (COBO) and manager of network hardware engineering at Microsoft Azure Infrastructure, explained: ‘When we launched COBO four years ago, there was only one pluggable form factor for 400G, and CFP was ugly at best.’ The consortium was originally formed to develop an industry standard for high-bandwidth on-board optics. Today’s options include the latest iteration of CFP, the CFP8; the Octal Small Form Factor Pluggable (OSFP); and QSFP double density (QSFP-DD) pluggable modules, alongside the COBO-supported 400G interfaces. COBO hosted the global premiere of the first COBO-compliant optical modules at last year’s ECOC Exhibition, featuring solutions from Molex, Ciena and SENKO, TE Connectivity, Credo and AOI.

Addressing pain points

Optoscribe, the integrated photonics components supplier, recently expanded its manufacturing facility, which CEO Nick Psaila said has helped it step up technical development in key areas, such as the data centre market. ‘Having additional production, engineering and test capacity – that’s been one of our biggest constraints over the last year or so,’ he said. ‘So, having that ability allows us to engage with more customers and to deliver more. We’ve seen an opportunity, and a shift in transceiver manufacturers’ priorities and pain points, and in where we can address them.’

One of the biggest challenges, said Psaila, is the assembly and packaging of these transceivers. ‘Particularly as there’s a real push towards greater and greater use of single mode transceivers in data centres, and that packaging and alignment challenge is particularly sensitive for single mode compared with multimode applications. Again, that assembly challenge becomes key and, for some types of transceiver, assembly can be quite a large proportion of the overall cost of the module. Technologies that allow more automated assembly processes and more passive alignment processes in single mode architectures are advantageous. By helping with that photonic interconnection, the optical alignment side, we can move with the industry’s demand for higher-volume automated assembly processes. That’s the basis for a lot of the technology we’re bringing to the table.’

‘There has been tremendous pressure on the transceiver manufacturers to really decrease cost and simultaneously increase speed, so the challenge for the transceiver manufacturers is to do that, particularly on single mode architectures. That’s one of the biggest changes in terms of where the industry is moving – a lot of the big cloud operators have chosen to use single mode as the dominant fibre type in the data centre, so that’s creating a lot of pressure on the transceiver manufacturers to deliver the next generation of performance, but also drive down the cost per gigabit. It’s been a real challenge using the existing technology platforms and approaches, and margins are getting squeezed substantially. That’s putting a lot of pressure on them to look for new alternatives that reduce the overall module cost. On the cloud side of things, there seems to be a lot of focus on the cost of single mode.’

Future growth

Modha doesn’t see the strength of the data centre market changing in the near future. ‘There will be exponential growth in fibre over the next five years, and with increased levels of traffic and the need for high speeds comes the need for bigger, better data centres.’

Also looking ahead, Booth said: ‘We know that working on form factors poses the question “what happens after 400G or 800G?” It’s not going to get easier – we need another tool to keep the power within limits. Data centres are predominantly optical except within the racks. Can we maintain this, using racks? Will there come a time when that is not feasible? If, or when, a data centre becomes fully optical, we will need a specification for embedded optics – Cisco and Juniper have done this in some of their systems. There is not a lot of competition for their modules.

‘We need something that builds multiple fibres into embedded modules. We’re working with members to build systems and test boards. People are used to doing things a certain way – with embedded it’s different. We need to be ready before the industry needs it.’

Smaller requirements

‘There will continue to be a market for large data centres moving to speeds of 400G and beyond, with increasing density and the low-power components that will be required,’ Urricariet said. ‘Certainly, the large, hyperscale data centre market will continue. The other areas are the smaller clouds and the enterprise data centre markets, which also drive requirements and, in many cases, different requirements. They require us to develop different products.

‘There are also new areas like edge data centres, which I believe may play an increasingly important role with respect to the type of products and the power dissipation that are required. This is all being driven by more distributed cloud applications, with more intelligence at the edge, especially for low-latency applications, which are now enabled by the deployment of 5G mobile networks, for example. Those are all areas where there will still be a data centre, but the requirements for those data centres will change.’

Urricariet concluded: ‘Beyond the large cloud service providers, the rest of the data centre market, which is smaller cloud and enterprise, has different needs. We are in production with both 40G and 100G SWDM4 QSFP transceivers for duplex multimode fibre. 40G and 100G are old news for large data centres in the cloud, but in the enterprise they are just beginning. Most enterprise data centres are at 10G and are now moving to 40G and 100G. So, technology like SWDM4 is very important. It provides a very low total cost for optical links.

‘Again, that transition to 40G and 100G is only beginning in that market segment. These products enable the use of existing fibre infrastructure, for example, and the lowest-capex infrastructure solutions. So, we have a novel technology to meet that need. It may not capture the headlines, because it is not something that the large cloud service providers use, but it’s very important for the overall market.’